Hello!

For this project, my first plan was to write and train my own neural network, and I used that for my first "progress documentation." But then I had to change my entire plan, since I realized that what I had planned was too complicated and too time-consuming for me to finish.

This is how far I got with Plan A:

Anyways, I am glad that I got a little bit of experience out of that. I learned a couple of things while writing that code with the help of Daniel Shiffman's YouTube videos (Coding Train).

Now Plan B:

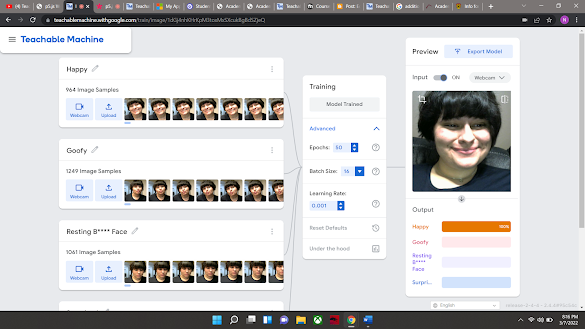

I decided to use Teachable Machine and started an image project. My goal was to train the machine to read facial expressions.

I went in the wrong direction at first by uploading images instead of using my webcam. I gave the machine many googled pictures of people with different facial expressions (including stock photos and pictures of celebrities).

I realized that the machine was even able to recognize facial expressions in cartoony pictures:

And the most EXCITING part was that it could also work on a few dog pictures:

That is, until I started to use my webcam, only to realize that the machine could give just one result (instead of giving me continuous results). After troubleshooting, I found out that the reason behind the problem was that I had given the machine images of random people (which obviously were not recorded with my webcam); therefore, it could not understand or read a changing/moving face.

So....

I had to do it all over again (YAYYY). This time I used my webcam and recorded myself, even though it was hard to smile for the "happiness" class since I was pretty disappointed at wasting my own time TWICE.

I also ended up deleting a few of the facial expressions from my list since a few of them were similar to each other and were a little too confusing for the machine to understand.

A few things that were challenging, but that I managed to fix:

- The "Disgusted" facial expression was getting confused with "Surprised," so I ended up deleting the Disgusted class.

- "Duck Face" was getting confused with "Surprised," so I ended up adding the Duck Face data to the "Goofy" class.

- "Happy" and Resting B**** Face (poker face) were getting confused as well. To fix this problem, I had to redo the "Happy" class, since my face in its resting state was being classified as "Happy".

I learned that the angles of the data taken by my webcam matter a lot! I am planning on training this once more while installing my project at The Space in Between.

Coding Train's video on Teachable Machine pointed me in the right direction for bringing this whole thing into my project using p5.js and ml5.

I also learned how to use emojis in this project, which was a nice touch!
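
To give an idea of how the emoji part can work, here is a minimal sketch in plain JavaScript. It assumes the classifier hands back an array of `{ label, confidence }` objects, which is the shape I understand ml5's image classifier to use. The label names, the emoji choices, and the helper names `topResult` and `emojiFor` are made up for illustration; this is not the exact code from the project.

```javascript
// Hypothetical label-to-emoji table. The labels would have to match the
// class names typed into Teachable Machine (these are illustrative).
const EMOJI_FOR_LABEL = new Map([
  ["Happy", "😄"],
  ["Goofy", "🤪"],
  ["Surprised", "😮"],
  ["Neutral", "😐"],
]);

// Return the entry with the highest confidence, or null for an empty list.
function topResult(results) {
  if (!results || results.length === 0) return null;
  return results.reduce((best, r) => (r.confidence > best.confidence ? r : best));
}

// Pick the emoji for one batch of results; "❓" when nothing matches.
function emojiFor(results) {
  const top = topResult(results);
  if (top === null) return "❓";
  return EMOJI_FOR_LABEL.get(top.label) ?? "❓";
}

// Example with made-up numbers (not measurements from the trained model).
const sampleResults = [
  { label: "Neutral", confidence: 0.21 },
  { label: "Happy", confidence: 0.74 },
  { label: "Goofy", confidence: 0.05 },
];
console.log(emojiFor(sampleResults));
```

In a p5.js sketch, the returned string could then be drawn on the canvas with `text()` inside `draw()`.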

I borrowed my brother's face to check whether the Teachable Machine and I were still on the same page.

Overall, everything was worth it. I am so happy with the final results!

Questions I was able to find answers to:

- How can I achieve continuous results instead of one?

- Will this machine give the same results for my family members' facial expressions?

- Would this machine work on dogs? (for fun)

Questions I currently have:

- Would this machine work on a person of a different race? To what extent can this machine understand our diversity? How important are the data and the training process?

- Would this trained project work when installed, or would it require further training to recognize my face in a different setting/lighting/angle?

Question regarding the installation process:

- How can I make the viewer engage with my work?

Update: Final Documentation:

Critique last week went great! I am glad I went super early to figure everything out for the presentation. I am also very thankful to everyone who gave me ideas and feedback to improve my work for the critique!

Struggle 1:

The Space in Between's huge projector and webcam:

I ended up not using the projector for my presentation, since the webcam was huge and focused on the entire room rather than on people's faces.

Solution 1:

I presented on my laptop on a table, with a chair placed in front of it so people could come and sit down one by one to interact with my work.

Why do I think this worked out really well? Because I trained my model while sitting on that chair, so the model has a more "focused" and more restricted understanding of distance for detecting facial expressions. This increased the chances of the trained model recognizing faces, since I retrained it many times at that specific distance and angle (from my laptop's screen to the face of the person sitting there).

Struggle 2:

I had eliminated many facial expressions and emojis to make it more "straightforward" for the model to understand and recognize, but then, with the feedback that I got, I ended up adding new "classes" to my model again, since people who try to interact with the work would probably try more than just four expressions!

Solution 2:

The final list of facial expressions:

1. Happy

2. Goofy

3. Duck Face

4. Neutral

5. Surprised

6. Sad

7. Upset

AND

8. Take That Mask Off (more on this one below)

Struggle 3:

A question that I still had from the previous week was how to make the viewer engage with the work.

Solution 3:

Well, since we wear masks at school, I decided to add a mask class as well. Instead of labelling it in my code as an emotion, I show a message asking the viewer who just sat down at the laptop to take their mask off. This way, the viewer gets an idea of what this model is about and how they can go further and try different things with it.
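
The idea of treating the mask class as a prompt rather than an emotion can be sketched roughly like this. The label string is the class name from my final list; the emojis, the message wording, and the function name `displayFor` are hypothetical stand-ins, not the project's actual code.

```javascript
// Class name as it appears in the final list of expressions.
const MASK_LABEL = "Take That Mask Off";

// Illustrative emojis for a few of the emotion classes (not the originals).
const EMOTION_EMOJI = new Map([
  ["Happy", "😄"],
  ["Sad", "😢"],
  ["Surprised", "😮"],
]);

// Decide what to show for a predicted label:
// a text prompt for the mask class, an emoji for everything else.
function displayFor(label) {
  if (label === MASK_LABEL) {
    return { kind: "message", text: "Please take your mask off to start!" };
  }
  return { kind: "emoji", text: EMOTION_EMOJI.get(label) ?? "❓" };
}

console.log(displayFor("Take That Mask Off").text);
console.log(displayFor("Happy").text);
```

Keeping the mask case separate from the emoji table means the prompt can be styled differently (for example, smaller text) without touching the emotion classes.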

Overall, I found solutions to my questions from the previous week. The only question left was:

Would this machine work on a person of a different race? To what extent can this machine understand our diversity? How important are the data and the training process?

And to answer this, I am going to list a few observations:

- The model works best on MY face, since I trained it using only my own face.

- It works on my brother's face, but my brother is obviously similar to me complexion/race-wise. The only difference is our genders, and it still worked.

- It also works fine (not perfectly) on people with a complexion similar to mine.

- It did not mind different hair colors, but it definitely got confused by skin tones and faces that were not similar to mine.

- Our diversity is very important for going forward with technology. In order for audiences from all around the world to be able to interact with similar work, it is very important that we create something that can understand this as well, by giving it the right data while training it. Unfortunately, I could not train my model to be more diverse: my laptop went through so much pain just to train my model multiple times using ONLY my face, and I cannot imagine what would happen to it if I included all kinds of diversity in my training. This is not an excuse for companies like Apple or anything, though.

I learned a ton doing this project, not only from the class and from Paul, but also from other students and Coding Train. Thank you, everyone!

I also had a lot of fun!
